News

-

![c' dots illustration]()

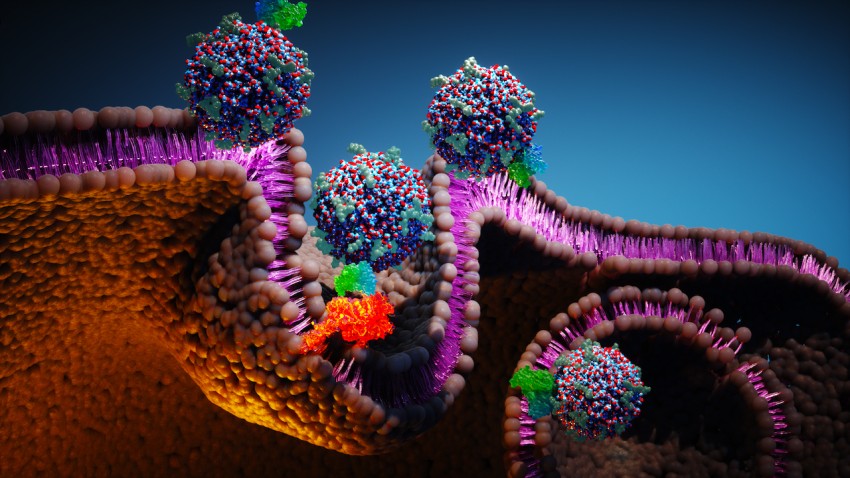

Cornell-developed particles supercharge cancer immunotherapy

A class of ultrasmall fluorescent core-shell silica nanoparticles developed at Cornell is showing an unexpected ability to rally the immune system against melanoma and dramatically improve the effectiveness of cancer immunotherapy.

Congestion pricing improved air quality in NYC and suburbs

Cornell researchers tallied the environmental benefits of New York City’s congestion pricing program and found air pollution dropped by 22% in Manhattan, with additional declines across the city’s five boroughs and surrounding suburbs.

-

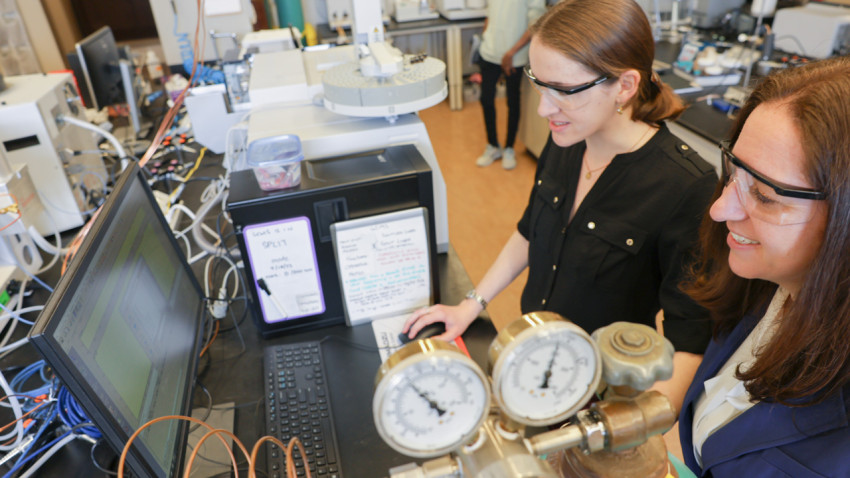

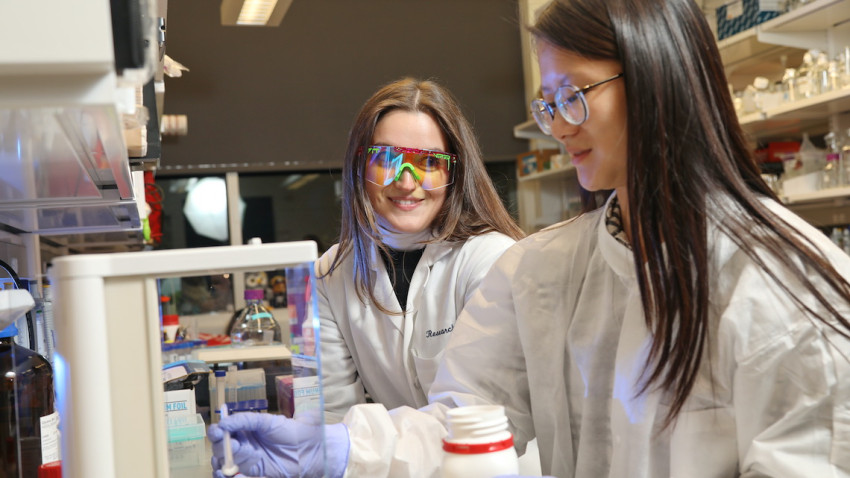

![Postdoctoral researcher Rebecca Gerdes, Ph.D. ’24, (left) and Jillian Goldfarb, associate professor of chemical and biomolecular engineering, led an interdisciplinary team that determined that organic residues of plant oils are poorly preserved in calcareous soils from the Mediterranean.]()

Ancient dirty dishes reveal decades of questionable findings

An interdisciplinary team of researchers determined that organic residues of plant oils are poorly preserved in calcareous soils from the Mediterranean, a finding that suggests archaeologists have likely been misidentifying olive oil in ceramic artifacts for decades.

Latest Awards and Recognition

Bizyaeva receives outstanding paper award

Anastasia Bizyaeva, assistant professor, has received the 2025 George S. Axelby Outstanding Paper Award,…

December 15, 2025

Martínez named distinguished member of the ACM

Jose F. Martínez, the Lee Teng-hui Professor of Engineering, has been named a 2025 Association…

December 12, 2025

Lastovicka receives President’s Award

Chris Lastovicka, assistant director of digital strategy for Cornell Engineering marketing and communications, received the…

December 4, 2025

Three research teams receive grants

Three Cornell engineering research teams have been chosen as recipients of AI and Climate Fast…

December 1, 2025